SQL Interview Questions and Answers - Part 18

Are you preparing for the SQL Interview? If yes, then you are at the right place. This is the SQL Interview Questions and Answers article series. Here we will see the Top 100+ SQL Interview Questions with Answers.

Please visit our YouTube Channel for Interviews and other videos by below link:

Please read the complete Design Pattern, C#, MVC, WebAPI, and .Net Framework Interview Questions and Answers article series here.

Introduction

This is the 18th part of the SQL Interview Questions and Answers article series. Each part contains eight to ten SQL Interview Questions with Answers. Please read all the SQL Interview Questions list here.

I will highly recommend to please read the previous parts over here before continuing the current part:

SQL Interview Questions and Answers - Part 18

Q161. What is Concurrency in SQL? What are the different concurrency problems in SQL?

Concurrency is a situation that arises in a database due to the transaction process. Concurrency occurs when two or more than two users are trying to access the same data or information at the same time.

Users who access a resource at the same time are said to be accessing the resource concurrently. Concurrent data access requires mechanisms to prevent adverse effects when multiple users try to modify resources that other users are actively using.

Concurrency Problem or Concurrency Effects:

In SQL concurrency is considered a problem because accessing data simultaneously by two different users can lead to inconsistent results or invalid behavior.

The concurrency problem mostly arises when multiple users try to write the same record, or when one is writing and the other is reading the same record.

Users modifying data can affect other users who are reading or modifying the same data at the same time. These users are said to be accessing the data concurrently.

If a data storage system has no concurrency control, users could see the following side effects:

- Dirty Reads

- Phantom Reads

- Non-Repeatable Reads

- Lost Updates

Q162. What is a Dirty Read Problem in SQL? How can you avoid this Dirty Read Anomaly?

The Dirty Read Problem occurs when another process reads the changed, but uncommitted data. So reading uncommitted data cause a dirty read anomaly in SQL. For example, if one process has changed data but has not committed it yet, another process is able to read the same data. This leads to an inconsistent state for the reader.

It is also known as an Uncommitted Read Issue. Uncommitted dependency occurs when a second transaction selects a row that is being updated by another transaction. The second transaction is reading data that has not been committed yet and may be changed by the transaction updating the row.

Q163. What is a Phantom Read Problem in SQL? How can you avoid this Phantom Read Anomaly?

A Phantom Read is a situation that occurs when two identical queries are executed and the collection of rows returned by the second query is different.

If two similar queries are executed by two different users, and it shows different outputs, then it would be a Phantom Read problem.

The two SELECT statements in the first transaction may return different results because the INSERT statement in the second transaction changes the data used by both.

For Example: If user A executes the SELECT query to read some data from a table, at the same time another user B INSERT some new data. But user A only able to read the old data at the first attempt, but when user A re-runs the same query then he gets a different set of data.

Q164. What is a Non-Repeatable Read Problem in SQL? How can you avoid this Non-Repeatable Read Problem of SQL?

The Non-Repeatable Reads occurs when the second transaction accesses the same row several times and reads different data each time. This problem occurs when one process is reading the data, while another process is writing the data at the same time.

In non-repeatable reads, the first process reading the value might get two different values, as the changed data is read a second time because the second process changes the data.

It is similar to the dirty read problem in that another transaction is changing the data that a second transaction is reading. However, in Non-repeatable Reads, the data read by the second transaction was committed by the transaction that made the change.

Also, in the Non-Repeatable issue multiple reads (two or more) of the same row, and each time the information is changed by another transaction; thus, the term nonrepeatable read.

Q165. What is a Lost Update Problem in SQL? How can you avoid this Lost Update Problem of SQL?

The Lost Update Problem is also known as Missing Update Problem or Double Updates Problem. This problem occurs when two processes try to manipulate the same data simultaneously. This problem can lead to data loss, or the second process might overwrite the first process change.

Transactions that are running at the READ UNCOMMITTED isolation level do not issue shared locks to prevent other transactions from modifying data read by the current transaction.

Transactions that are running at the READ COMMITTED isolation level do issue shared locks, but the row or page locks are released after the row is read.

Q166. How can you solve the Concurrency Problems in SQL? What are the different Concurrency Control Mechanisms available in SQL?

When multiple persons attempt to modify data in a database at the same time, a system of controls must be implemented so that modifications made by one person do not adversely affect those of another person. This is called concurrency control.

Concurrency control theory has two classifications for the methods of instituting concurrency control. These all the 5 isolation levels work on these two major concurrency control models:

Pessimistic Concurrency Control:

In pessimistic concurrency control, the readers can block writers, and the writers can block readers.

A system of locks prevents users from modifying data in a way that affects other users. After a user performs an action that causes a lock to be applied, other users cannot perform actions that would conflict with the lock until the owner releases it.

This is called pessimistic control because it is mainly used in environments where there is high contention for data, where the cost of protecting data with locks is less than the cost of rolling back transactions if concurrency conflicts occur.

The Read Uncommitted, Read Committed, Repeatable Read, and Serializable Isolation Levels come under the pessimistic concurrency model.

Optimistic Concurrency Control:

In optimistic concurrency control, the readers cannot block writers, and the writers cannot block readers, but the writer can block another writer.

In optimistic concurrency control, users do not lock data when they read it. When a user updates data, the system checks to see if another user changed the data after it was read.

If another user updated the data, an error is raised.

Typically, the user receiving the error rolls back the transaction and starts over. This is called optimistic because it is mainly used in environments where there is low contention for data, and where the cost of occasionally rolling back a transaction is lower than the cost of locking data when read.

Snapshot Isolation Level comes under the optimistic concurrency model

Q167. What is Transaction Isolation? What are the different Transaction Isolation Levels in SQL?

Transactions specify an isolation level that defines the degree to which one transaction must be isolated from resource or data modifications made by other transactions.

SQL Server supports a range of concurrency control. Users specify the type of concurrency control by selecting transaction isolation levels for connections.

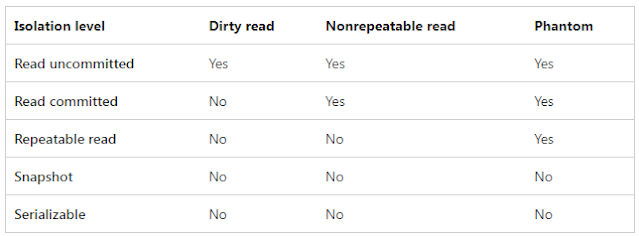

SQL Server provides 5 different levels of transaction isolation to overcome concurrency problems. When we connect to a SQL server database, the application can submit queries to the database with one of five different isolation levels:

- Read Uncommitted Isolation Level

- Read Committed Isolation Level

- Repeatable Read Isolation Level

- Serializable Isolation Level

- Snapshot Isolation Level

Out of these five isolation levels, Read Uncommitted, Read Committed, Repeatable Read, and Serializable come under the pessimistic concurrency model. Snapshot comes under the optimistic concurrency model.

These attributes can be defined using Transact-SQL statements or through the properties and attributes of database application programming interfaces.

Isolation levels are described in terms of which concurrency side-effects, such as dirty reads or phantom reads, are allowed. These levels are ordered in terms of the separation of work by two different processes, from minimal separation to maximal.

Choosing a transaction isolation level does not affect the locks acquired to protect data modifications. A transaction always gets an exclusive lock on any data it modifies, and holds that lock until the transaction completes, regardless of the isolation level set for that transaction.

A lower isolation level increases the ability of many users to access data at the same time but increases the number of concurrency effects (such as dirty reads or lost updates) users might encounter.

Conversely, a higher isolation level reduces the types of concurrency effects that users may encounter, but requires more system resources and increases the chances that one transaction will block another.

Choosing the appropriate isolation level depends on balancing the data integrity requirements of the application against the overhead of each isolation level.

The highest isolation level, serializable, guarantees that a transaction will retrieve exactly the same data every time it repeats a read operation, but it does this by performing a level of locking that is likely to impact other users in multi-user systems.

The lowest isolation level, read uncommitted, may retrieve data that has been modified but not committed by other transactions. All of the concurrency side effects can happen in read uncommitted, but there is no read locking or versioning, so overhead is minimized.

Q168. What is the difference between the Read Committed and Read Uncommitted Isolation Levels in SQL?

Read Uncommitted Isolation Level:

Read Uncommitted is the lowest isolation level where transactions are isolated only enough to ensure that physically corrupt data is not read. It is the first level of isolation, and it comes under the pessimistic model of concurrency.

In Read Uncommitted, one transaction is allowed to read the data that is about to be changed by the COMMIT of another process. So one transaction may see not-yet-committed changes made by other transactions. Hence the Dirty Read Problem exists in this isolation.

Read Committed Isolation Level:

This is the default Isolation Level of the Database Engine. This is the second level of isolation and falls under the pessimistic model of concurrency.

In the Read Committed isolation level, we are only allowed to read data that is committed. This level eliminates the dirty read problem.

In this level, if you are reading data then the concurrent transactions that can delete or write data, some work is blocked until other work is complete.

It allows a transaction to read data previously read (not modified) by another transaction without waiting for the first transaction to complete. The Database Engine keeps write locks (acquired on selected data) until the end of the transaction, but read locks are released as soon as the SELECT operation is performed.

Q169. What is the Repeatable Read Isolation Level in SQL?

The Database Engine keeps read and write locks that are acquired on selected data until the end of the transaction. The Repeatable Read isolation level is similar to the Read Committed level and eliminates the Non-Repeatable Read problem.

In this isolation level, the transaction has to wait till another transaction's update or read query is complete. However, because range locks are not managed, phantom reads can occur. This means if there is an INSERT transaction, it does not wait for anyone. This can lead to the Phantom Read problem.

Q170. What is the difference between Serializable and Snapshot Isolation Levels in SQL?

Serializable Isolation Level:

Serializable is the highest level of isolation in the pessimistic model. It is the highest level where transactions are completely isolated from one another.

By implementing this level of isolation, we can prevent the Phantom Read problem. In this level of isolation, we can ask any transaction to wait until the current transaction completes.

The Database Engine keeps read and write locks acquired on selected data to be released at the end of the transaction. Range locks are acquired when a SELECT operation uses a ranged WHERE clause, especially to avoid phantom reads.

Snapshot Isolation Level:

SQL Server also supports two additional transaction isolation levels that use row versioning. One is an implementation of read committed isolation, and one is a transaction isolation level, snapshot.

Snapshot follows the optimistic model of concurrency, and this level of isolation takes a snapshot of the current data and uses it as a copy for the different transactions.

1. Read Committed Snapshot Isolation:

READ_COMMITTED_SNAPSHOT ON:

When the READ_COMMITTED_SNAPSHOT database option is set ON, read committed isolation uses row versioning to provide statement-level read consistency. Here the Database Engine uses row versioning to present each statement with a transactionally consistent snapshot of the data as it existed at the start of the statement.

Read operations require only SCH-S table-level locks and no page or row locks. Locks are not used to protect the data from updates by other transactions.

READ_COMMITTED_SNAPSHOT OFF:

When the READ_COMMITTED_SNAPSHOT database option is set OFF, which is the default setting, read committed isolation uses shared locks to prevent other transactions from modifying rows while the current transaction is running a read operation.

The shared locks also block the statement from reading rows modified by other transactions until the other transaction is completed. Both implementations meet the ISO definition of read committed isolation.

2. Snapshot Isolation Level:

The snapshot isolation level uses row versioning to provide transaction-level read consistency. Read operations acquire no page or row locks; only SCH-S table locks are acquired.

When reading rows modified by another transaction, they retrieve the version of the row that existed when the transaction started.

You can only use Snapshot isolation against a database when the ALLOW_SNAPSHOT_ISOLATION database option is set ON. By default, this option is set OFF for user databases.

SQL Server does not support the versioning of metadata. For this reason, there are restrictions on what DDL operations can be performed in an explicit transaction that is running under snapshot isolation.

So ALTER TABLE, CREATE INDEX, CREATE XML INDEX, ALTER INDEX, DROP INDEX, DBCC REINDEX, ALTER PARTITION FUNCTION, ALTER PARTITION SCHEME, or any CLR DDL statement are not allowed under snapshot isolation after a BEGIN TRANSACTION statement.

To Be Continued Part-19...

Recommended Articles

Thanks for visiting this page. Please follow and join us on LinkedIn, Facebook, Telegram, Quora, YouTube, Twitter, Pinterest, Tumbler, and VK for regular updates.

No comments:

Post a Comment

Please do not enter any HTML. JavaScript or spam link in the comment box.